T-sne is a Latent Space visualization / dimensionality reduction technique particularly well-suited for high-dimensional data. It focuses on preserving local structures in the data, making it effective for visualizing clusters and patterns.

In Layman’s terms, it does this by computing the nearest neighbors of each point in the high dimensional space, and attempting to fine tune a model in the low dimensional space that matches these distances, such that points that were close in high-dim space remain close in low-dim space. The main difference between this (SNE) and t-SNE is that t-SNE uses a heavy-tailed distribution (Student t-distribution) in the low-dimensional space to better handle the “crowding problem”, where points tend to cluster too closely together. In addition, it makes the algorithm more efficient to compute(however, still extremely computationally expensive for large datasets).

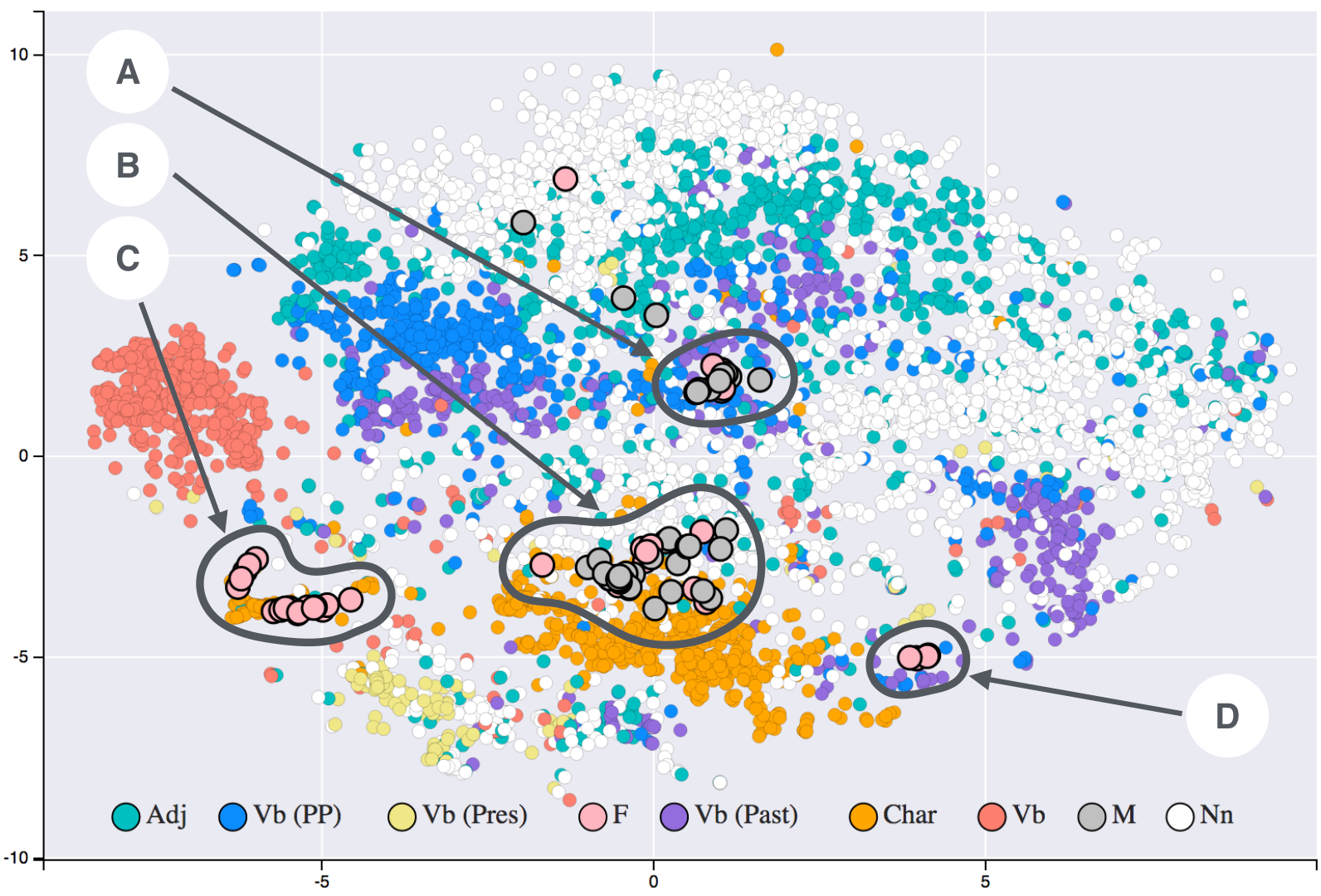

T-SNE applied to a 19th century word embeddings dataset, showing how words with similar meanings cluster together.

Formulation

High-Dim Similarities

T-SNE first computes pairwise similarities in the original high-dimensional space using a Gaussian distribution. The conditional probability that point would pick as its neighbor is:

where is the variance of the Gaussian centered on . This variance is determined by the perplexity parameter.

To make the similarity metric symmetric, we use:

Low-Dim Similarities

In the low-dimensional map, t-SNE uses a Student t-distribution with one degree of freedom (also known as the Cauchy distribution). This heavy-tailed distribution is key to avoiding the “crowding problem”:

where and are the low-dimensional representations of and .

Optimization Objective

T-SNE minimizes the Kullback-Leibler divergence between these two distributions:

The gradient of this cost function with respect to the low-dimensional points is:

This gradient is optimized using gradient descent with momentum.

Perplexity and

The perplexity is related to the entropy of the conditional probability distribution:

For each point , a binary search finds the that produces the desired perplexity. This means each point effectively has a different “bandwidth” depending on the local density of the data.

Intuition

How T-SNE works can be understood in two main steps:

- Build a probability distribution over pairs of high-dimensional objects in such a way that similar objects have a high probability of being picked, while dissimilar points have an extremely small probability of being picked.

- Then, define a similar probability distribution over the points in the low-dimensional map, and minimize the Kullback-Leibler divergence between the two distributions with respect to the locations of the points in the map.

A key parameter in T-SNE is perplexity, which can be thought of as a smooth measure of the effective number of neighbors. It influences how the algorithm balances attention between local and global aspects of the data. Lower perplexity values focus more on local structures, while higher values capture broader patterns.

The different perplexity values 5-100 and how they change the depiction of the data.

Limitations

While a great approximation for many dimension reduction tasks, T-SNE has a few key issues. Namely, it is completely non-deterministic, and is unable to generalize to new data like Principle Component Analysis. It is a “trained” model in the sense that it learns this data distribution specifically, and that also comes with the downside of computational complexity, . In addition, many features of the visualization have no inherent meaning, namely that the distances between different clusters hold no value, and the cluster sizes themselves are arbitrary

When To Use What

Use t-SNE when: You want to visualize high-dimensional data with focus on local structure and clustering patterns.

Use PCA when: You need deterministic results, global structure preservation, or the ability to transform new data.

Use UMAP when: You want fast computation with both local and global structure preserved and the ability to transform new data.