Latent space is a widely used term for the internal representations that machine learning models, particularly deep learning models, learn from data. It refers to an abstract multi-dimensional space, usually of much lower dimension than the raw input, in which complex data structures are encoded in simpler, more manageable forms.

A latent space is learned by models such as autoencoders, variational autoencoders (VAEs), and generative adversarial networks (GANs). Autoencoders and VAEs learn to compress input data into a lower-dimensional representation (the latent space) and then reconstruct the original data from this representation. A GAN has no encoder in its standard form; its generator instead maps points sampled from a latent space to new data samples.
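
The compress-then-reconstruct idea can be sketched with a linear autoencoder, which is the simplest possible case and is not how deep models are trained in practice. The example below is a minimal sketch under stated assumptions: the toy dataset, the latent size of 2, and the helper names `encode` and `decode` are all hypothetical, and the weights come from an SVD (equivalent to PCA) rather than from gradient descent.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data (assumption): 200 samples in 10 dimensions that are really
# driven by only 2 hidden factors, plus a little noise.
factors = rng.normal(size=(200, 2))
mixing = rng.normal(size=(2, 10))
X = factors @ mixing + 0.01 * rng.normal(size=(200, 10))

# Fit a linear encoder/decoder: the top 2 right singular vectors of the
# centered data span the best 2-dimensional linear latent space.
mean = X.mean(axis=0)
_, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
W = Vt[:2].T  # shape (10, 2): maps 10-D inputs to 2-D latent codes


def encode(x):
    """Compress inputs into 2-D latent codes."""
    return (x - mean) @ W


def decode(z):
    """Reconstruct 10-D data from latent codes."""
    return z @ W.T + mean


Z = encode(X)        # latent representation, shape (200, 2)
X_hat = decode(Z)    # reconstruction, shape (200, 10)
reconstruction_error = float(np.mean((X - X_hat) ** 2))
print(Z.shape, reconstruction_error)
```

Because the toy data has only two underlying factors, the two-dimensional latent codes retain almost all of the information and the reconstruction error stays small. A deep autoencoder follows the same encode and decode pattern but replaces the linear maps with neural networks.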

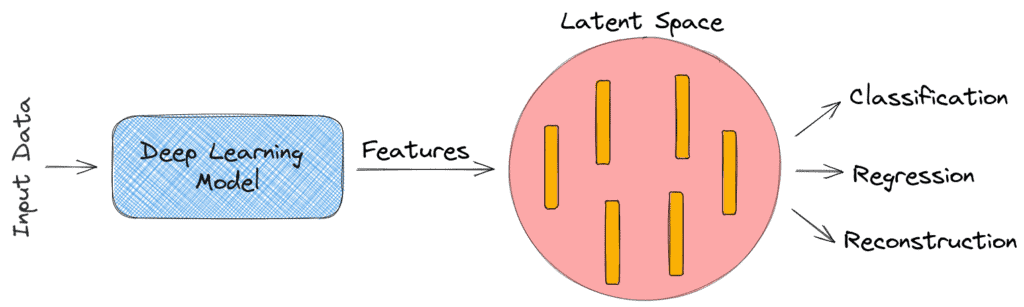

Think of encoding into a latent space as a feature extraction process: the model identifies the most important characteristics of the data and represents them in a way that captures the underlying patterns and relationships. Working with this compact representation makes data manipulation, generation, and analysis more efficient.