Although simple, the softmax function is a crucial component of many machine learning models, particularly in classification tasks. It converts a vector of raw scores (logits) into probabilities that sum to one, which makes it well suited to multi-class classification problems.

It is defined as follows:

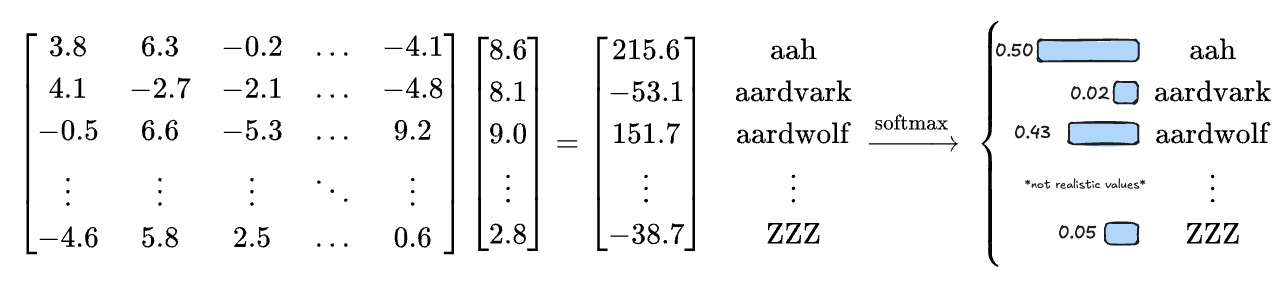
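
To make the definition concrete, here is a minimal NumPy sketch of the standard softmax, in which each output is the exponential of one logit divided by the sum of the exponentials of all logits. The function name `softmax` and the example logits are illustrative choices. Subtracting the maximum is a common numerical-stability trick that is assumed here rather than taken from the definition above.

```python
import numpy as np


def softmax(logits):
    """Convert a 1-D sequence of logits into probabilities that sum to one."""
    z = np.asarray(logits, dtype=np.float64)
    # Shifting by the maximum leaves the result unchanged (the factor cancels
    # in the ratio) but keeps exp() from overflowing on large logits.
    shifted = z - np.max(z)
    exp_z = np.exp(shifted)
    return exp_z / np.sum(exp_z)


# Example logits chosen purely for illustration.
probs = softmax([2.0, 1.0, 0.1])
print(probs)        # roughly [0.659, 0.242, 0.099]
print(probs.sum())  # sums to one, up to floating-point rounding
```

Larger logits receive larger probabilities, and the ordering of the inputs is preserved in the outputs.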

In large language models, softmax is typically applied at the output layer to convert the logits into a probability for each token in the vocabulary, so the model can predict the next token, for example by picking the one with the highest probability:
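
A rough sketch of this step, assuming a toy four-token vocabulary and made-up logits (both hypothetical; a real model produces one logit per token in a much larger vocabulary), with greedy selection of the most probable token:

```python
import numpy as np


def softmax(logits):
    """Numerically stable softmax over a 1-D sequence of logits."""
    z = np.asarray(logits, dtype=np.float64)
    exp_z = np.exp(z - np.max(z))
    return exp_z / exp_z.sum()


# Hypothetical vocabulary and output-layer logits, for illustration only.
vocab = ["cat", "dog", "mat", "sat"]
logits = [1.2, 0.3, 3.1, -0.5]

probs = softmax(logits)

# Greedy choice: take the index of the highest probability.
next_token_id = int(np.argmax(probs))
next_token = vocab[next_token_id]

for token, p in zip(vocab, probs):
    print(f"{token}: {p:.3f}")
print("predicted next token:", next_token)
```

Taking the argmax is only one way to use these probabilities. Sampling from the distribution is another common option.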