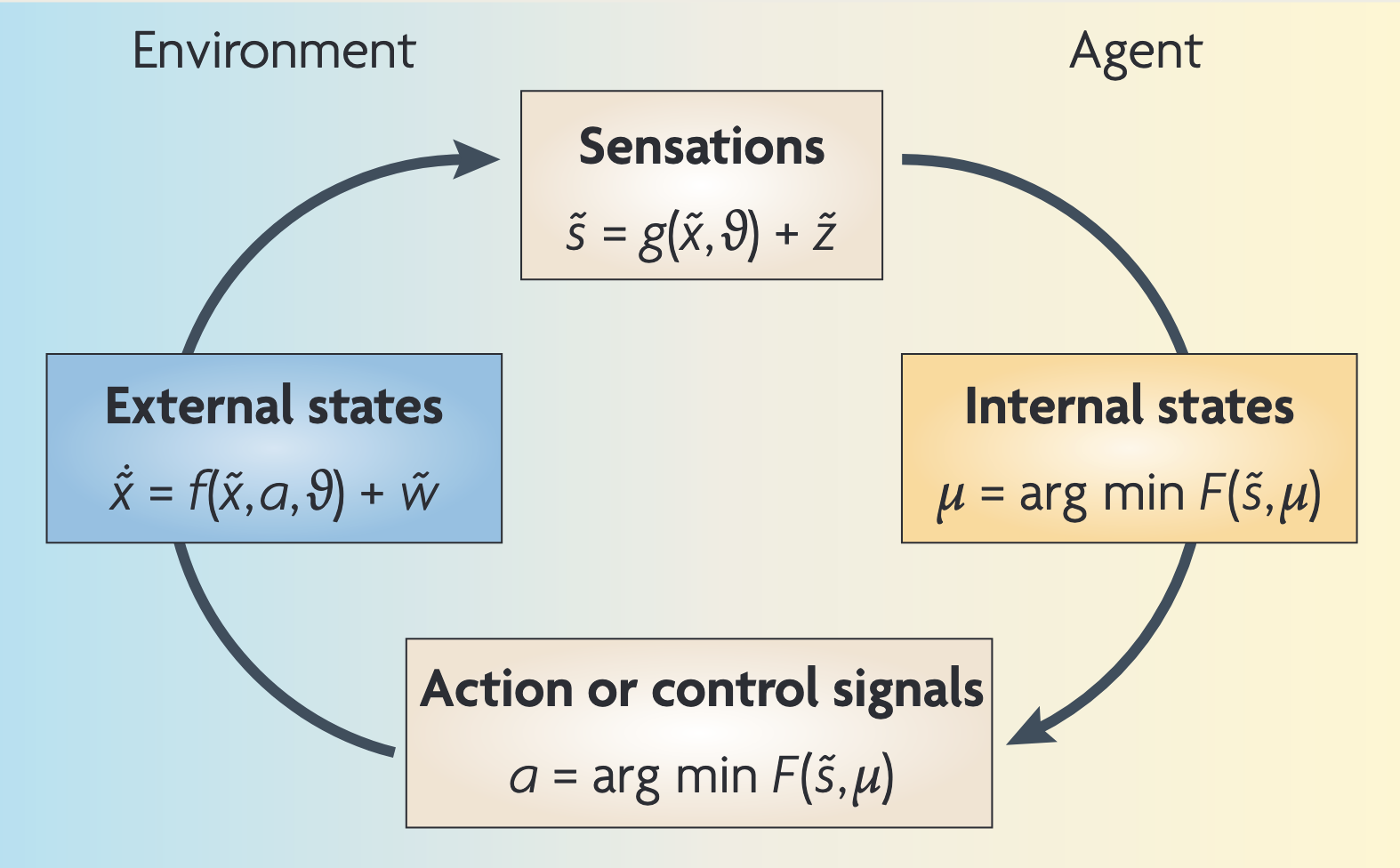

The Free Energy Principle is a mathematical principle rooted in information theory. It proposes that living systems must minimize a quantity called “free energy” in order to maintain their existence in a dynamic world (Friston, 2010).

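The “free energy” quantity can be thought of as an analogue of entropy in information theory, combined with ideas from Bayesian inference. More precisely, free energy is an upper bound on surprise (the negative log probability of a sensory observation), and the long-run average of surprise is the entropy of the system’s sensory states. Minimizing free energy therefore keeps that entropy low.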
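The bound on surprise can be checked numerically. The following is a minimal sketch, assuming a toy discrete model with two hidden states and a single observed outcome; the names `prior`, `likelihood`, and `free_energy` and all the numbers are illustrative assumptions, not taken from Friston (2010). It computes the variational free energy of a belief `q` over hidden states and compares it with the surprise of the observation.

```python
import math

# Hypothetical toy model: two hidden states s, one observed outcome o.
prior = [0.5, 0.5]        # p(s)
likelihood = [0.9, 0.2]   # p(o | s) for the outcome that was observed

def free_energy(q):
    """Variational free energy F = sum_s q(s) * (log q(s) - log p(o, s))."""
    total = 0.0
    for q_s, p_s, p_o_given_s in zip(q, prior, likelihood):
        if q_s > 0.0:  # terms with q(s) = 0 contribute nothing
            total += q_s * (math.log(q_s) - math.log(p_s * p_o_given_s))
    return total

# Model evidence p(o), its surprise, and the exact Bayesian posterior p(s | o).
evidence = sum(p * l for p, l in zip(prior, likelihood))
surprise = -math.log(evidence)
posterior = [p * l / evidence for p, l in zip(prior, likelihood)]

f_guess = free_energy([0.5, 0.5])    # an arbitrary belief
f_posterior = free_energy(posterior) # the exact posterior belief

print(f"surprise       = {surprise:.4f}")
print(f"F(guess)       = {f_guess:.4f}")
print(f"F(posterior)   = {f_posterior:.4f}")
```

In this sketch the free energy of the arbitrary belief sits above the surprise, and the two coincide when the belief equals the exact posterior, which is the sense in which minimizing free energy doubles as approximate Bayesian inference.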

Backpropagation-based machine learning models already do something similar: they are trained to minimize a loss function. However, their scope is narrower, since they minimize loss only for a specific task rather than to maintain their overall existence.

Consider the example of identifying a predator, such as a tiger, in an image. A machine learning model trained to identify tigers achieves a low loss when it correctly identifies the tiger. However, this does not by itself contribute to the model’s overall survival or adaptability in a changing environment.
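The narrow scope of task-specific loss minimization can be seen in a small sketch of the tiger example. It assumes a hypothetical data set in which each image is reduced to a single made-up "stripe score" and a label (1 for tiger, 0 otherwise), and it uses a single-neuron logistic model, so the backward pass is one chain-rule step rather than full backpropagation through many layers. All names and values here are illustrative assumptions.

```python
import math

# Hypothetical toy data: (stripe score, label), where label 1 means "tiger".
data = [(0.9, 1), (0.8, 1), (0.7, 1), (0.3, 0), (0.2, 0), (0.1, 0)]

def predict(w, b, x):
    """Probability that the image shows a tiger, via a sigmoid."""
    return 1.0 / (1.0 + math.exp(-(w * x + b)))

def loss(w, b):
    """Mean binary cross-entropy over the data set."""
    return -sum(
        y * math.log(predict(w, b, x)) + (1 - y) * math.log(1 - predict(w, b, x))
        for x, y in data
    ) / len(data)

w, b, lr = 0.0, 0.0, 1.0
initial_loss = loss(w, b)

for _ in range(500):
    # Gradients of the cross-entropy with respect to w and b
    # (chain rule through the sigmoid gives the "prediction - label" form).
    grad_w = sum((predict(w, b, x) - y) * x for x, y in data) / len(data)
    grad_b = sum((predict(w, b, x) - y) for x, y in data) / len(data)
    w -= lr * grad_w
    b -= lr * grad_b

final_loss = loss(w, b)
print(f"loss before training: {initial_loss:.4f}")
print(f"loss after training:  {final_loss:.4f}")
```

Nothing in this loop refers to the model's own persistence: the loss is defined only over the labelled task, so driving it down says nothing about how the model would fare if its environment changed.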

Bibliography

- Friston, K. (2010). The Free-Energy Principle: A Unified Brain Theory? Nature Reviews Neuroscience, 11(2), 127–138. https://doi.org/10.1038/nrn2787