A Kalman Filter is a tool for incorporating noisy measurements over time to estimate the true state of a dynamic system. It is widely used in control systems, robotics, and signal processing.

An Underlying Dynamical System Model

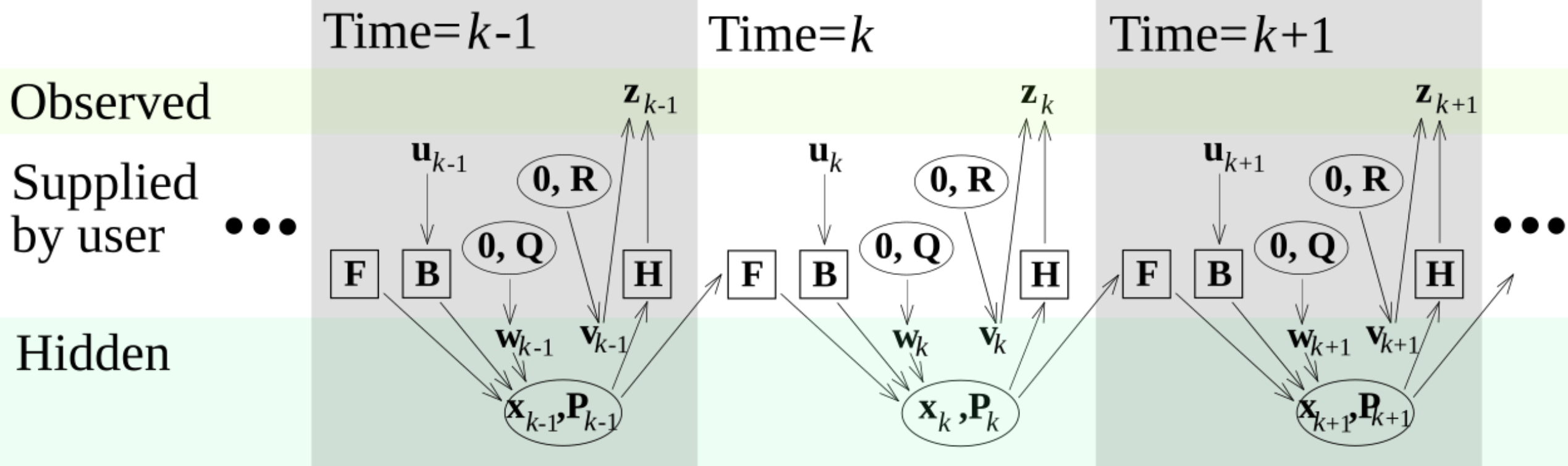

A great way to understand the Kalman Filter is to think of it as a hidden Markov model where the hidden states evolve over time according to a linear dynamical system, and the observations are noisy measurements of these states.

Model underlying the Kalman filter. Squares represent matrices. Ellipses represent multivariate normal distributions (with the mean and covariance matrix enclosed). Unenclosed values are vectors. For the simple case, the various matrices are constant with time, and thus the subscripts are not used, but Kalman filtering allows any of them to change each time step.

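In equations, the model is

$$\mathbf{x}_k = F_k \mathbf{x}_{k-1} + B_k \mathbf{u}_k + \mathbf{w}_k, \qquad \mathbf{w}_k \sim \mathcal{N}(0, Q_k)$$

$$\mathbf{z}_k = H_k \mathbf{x}_k + \mathbf{v}_k, \qquad \mathbf{v}_k \sim \mathcal{N}(0, R_k)$$

where $B_k \mathbf{u}_k$ is an optional control term and:

- $F_k$ is the state transition model

- $H_k$ is the observation model

- $Q_k$ is the covariance of the process noise

- $R_k$ is the covariance of the observation noise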
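To make the model concrete, here is a minimal sketch that draws a trajectory from it with NumPy. It assumes time-invariant matrices and no control input. The function name `simulate` and the example matrices (a constant-velocity model that observes position only) are illustrative choices, not taken from the text above.

```python
import numpy as np


def simulate(F, H, Q, R, x0, steps, seed=0):
    """Sample hidden states and measurements from the linear-Gaussian model.

    x_k = F x_{k-1} + w_k,  w_k ~ N(0, Q)
    z_k = H x_k + v_k,      v_k ~ N(0, R)
    (time-invariant matrices, no control input)
    """
    rng = np.random.default_rng(seed)
    n, m = F.shape[0], H.shape[0]
    x = np.asarray(x0, dtype=float)
    states, measurements = [], []
    for _ in range(steps):
        x = F @ x + rng.multivariate_normal(np.zeros(n), Q)  # hidden state
        z = H @ x + rng.multivariate_normal(np.zeros(m), R)  # noisy observation
        states.append(x)
        measurements.append(z)
    return np.array(states), np.array(measurements)


# Hypothetical example: state = [position, velocity], only position is observed.
F = np.array([[1.0, 1.0], [0.0, 1.0]])
H = np.array([[1.0, 0.0]])
Q = 0.01 * np.eye(2)
R = np.array([[4.0]])
states, measurements = simulate(F, H, Q, R, x0=[0.0, 1.0], steps=5)
print(states.shape, measurements.shape)  # (5, 2) (5, 1)
```

Only `measurements` would be visible to a filter; `states` plays the role of the hidden chain in the diagram.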

The Kalman Filter Algorithm

The Kalman Filter operates in two stages: predict and update. At each time step $k$, we maintain an estimate of the state, $\hat{\mathbf{x}}_{k|k}$, and its uncertainty (covariance), $P_{k|k}$.

Prediction Step

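First, we predict the next state based on the system dynamics:

$$\hat{\mathbf{x}}_{k|k-1} = F_k \hat{\mathbf{x}}_{k-1|k-1} + B_k \mathbf{u}_k$$

$$P_{k|k-1} = F_k P_{k-1|k-1} F_k^\top + Q_k$$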

Where:

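- $\hat{\mathbf{x}}_{k|k-1}$ is the a priori state estimate at step $k$ given observations up to step $k-1$

- $P_{k|k-1}$ is the a priori estimate covariance

- $B_k$ is the control input model, applied to the control vector $\mathbf{u}_k$ (often omitted if there is no control input)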
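The prediction step translates almost line for line into NumPy. This is a minimal sketch, assuming the state is a 1-D array and the matrices are 2-D arrays; `predict` is a hypothetical helper name, and the control term is applied only when both `B` and `u` are supplied.

```python
import numpy as np


def predict(x, P, F, Q, B=None, u=None):
    """Prediction step: returns the a priori estimate x_{k|k-1} and covariance P_{k|k-1}."""
    x_prior = F @ x
    if B is not None and u is not None:
        x_prior = x_prior + B @ u  # optional control input B_k u_k
    P_prior = F @ P @ F.T + Q      # propagate uncertainty, then add process noise
    return x_prior, P_prior


# Usage with a constant-velocity F and hypothetical values.
F = np.array([[1.0, 1.0], [0.0, 1.0]])
x_prior, P_prior = predict(np.array([0.0, 1.0]), np.eye(2), F, np.zeros((2, 2)))
print(x_prior)  # values [1, 1]
print(P_prior)  # values [[2, 1], [1, 1]]
```

Note how the uncertainty grows: $F P F^\top$ spreads the velocity uncertainty into position, and $Q_k$ is added on top.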

Update Step

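When we receive a measurement $\mathbf{z}_k$, we update our estimate.

First, compute the innovation covariance $S_k$ and the Kalman gain:

$$S_k = H_k P_{k|k-1} H_k^\top + R_k$$

$$K_k = P_{k|k-1} H_k^\top S_k^{-1}$$

Then update the state estimate:

$$\hat{\mathbf{x}}_{k|k} = \hat{\mathbf{x}}_{k|k-1} + K_k \left(\mathbf{z}_k - H_k \hat{\mathbf{x}}_{k|k-1}\right)$$

And the covariance:

$$P_{k|k} = (I - K_k H_k) P_{k|k-1}$$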

Where:

- $K_k$ is the Kalman gain, which determines how much to trust the measurement

- $\tilde{\mathbf{y}}_k = \mathbf{z}_k - H_k \hat{\mathbf{x}}_{k|k-1}$ is the innovation, or measurement residual
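A matching sketch of the update step, under the same array-shape assumptions as a plain NumPy implementation; `update` is a hypothetical name, and the scalar usage numbers are made up for illustration.

```python
import numpy as np


def update(x_prior, P_prior, z, H, R):
    """Update step: fold the measurement z into the a priori estimate."""
    y = z - H @ x_prior                      # innovation (measurement residual)
    S = H @ P_prior @ H.T + R                # innovation covariance
    K = P_prior @ H.T @ np.linalg.inv(S)     # Kalman gain
    x_post = x_prior + K @ y                 # corrected state estimate
    P_post = (np.eye(len(x_prior)) - K @ H) @ P_prior
    return x_post, P_post, K


# Scalar usage: prior N(0, 1), measurement 2 with noise variance 1 (hypothetical).
x_post, P_post, K = update(np.array([0.0]), np.array([[1.0]]),
                           np.array([2.0]), np.array([[1.0]]), np.array([[1.0]]))
print(K, x_post, P_post)  # gain 0.5, estimate 1.0, variance 0.5
```

The explicit `np.linalg.inv` mirrors the gain equation; for larger measurement vectors, solving the linear system (for example with `np.linalg.solve`) is generally more robust numerically. The simple covariance update can also drift from symmetry in floating point, which is why some implementations use the longer Joseph form instead.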

Intuition

From a Bayesian point of view

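The Kalman Filter is the optimal Bayesian filter for linear-Gaussian systems. At each step, we are looking to find the posterior:

$$p(\mathbf{x}_k \mid \mathbf{z}_{1:k}) \propto p(\mathbf{z}_k \mid \mathbf{x}_k)\, p(\mathbf{x}_k \mid \mathbf{z}_{1:k-1})$$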

Where:

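- Prior: $p(\mathbf{x}_k \mid \mathbf{z}_{1:k-1})$ comes from the prediction step

- Likelihood: $p(\mathbf{z}_k \mid \mathbf{x}_k)$ is given by the observation model

- Posterior: $p(\mathbf{x}_k \mid \mathbf{z}_{1:k})$ is our updated belief after seeing $\mathbf{z}_k$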

Because the prior and the likelihood are both Gaussian in $\mathbf{x}_k$, their product is proportional to another Gaussian. The posterior therefore stays Gaussian and can be computed in closed form.

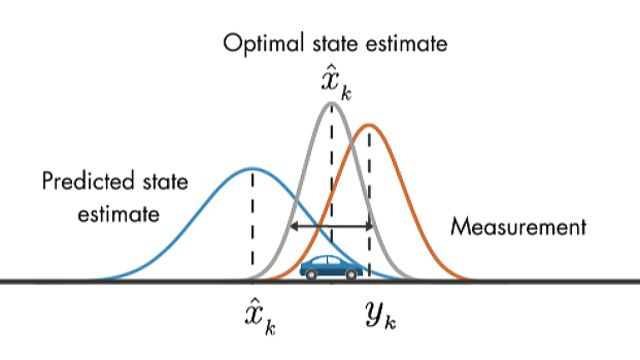
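For a scalar state measured directly ($H = 1$), the closed form can be checked numerically. This sketch uses hypothetical numbers: it computes the posterior with the scalar Kalman update, then multiplies the two densities on a grid, renormalizes, and compares the result with the closed-form Gaussian. The helper names `gaussian_pdf` and `fuse` are illustrative.

```python
import numpy as np


def gaussian_pdf(x, mean, var):
    return np.exp(-0.5 * (x - mean) ** 2 / var) / np.sqrt(2.0 * np.pi * var)


def fuse(prior_mean, prior_var, meas, meas_var):
    """Closed-form posterior for a scalar state measured directly (H = 1)."""
    K = prior_var / (prior_var + meas_var)        # scalar Kalman gain
    post_mean = prior_mean + K * (meas - prior_mean)
    post_var = (1.0 - K) * prior_var
    return post_mean, post_var


# Hypothetical prior N(1, 4) and measurement z = 3 with noise variance 1.
post_mean, post_var = fuse(1.0, 4.0, 3.0, 1.0)

# Brute-force check: prior times likelihood N(z=3; x, 1), viewed as a function of x.
xs = np.linspace(-10.0, 10.0, 20001)
product = gaussian_pdf(xs, 1.0, 4.0) * gaussian_pdf(xs, 3.0, 1.0)
product /= product.sum() * (xs[1] - xs[0])        # renormalize to a density
max_error = np.max(np.abs(product - gaussian_pdf(xs, post_mean, post_var)))
print(post_mean, post_var, max_error)  # mean about 2.6, variance about 0.8, tiny error
```

The posterior mean lands between the prior mean and the measurement, closer to the measurement because its variance is smaller, and the posterior variance is smaller than either input variance.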

The Kalman Gain Intuition

The Kalman gain balances trust between our prediction and the measurement:

- If measurement noise is small ($R_k$ small): $K_k$ is large → trust the measurement more

- If prediction uncertainty is small ($P_{k|k-1}$ small): $K_k$ is small → trust the prediction more

The update equation can be read as:

“Start with our prediction, then correct it proportionally to how surprising the measurement was.”
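In the scalar case with $H = 1$, the gain formula reduces to $K = P_{k|k-1} / (P_{k|k-1} + R)$, which makes both extremes easy to see. A small sketch with hypothetical variance pairs; `scalar_gain` is an illustrative name.

```python
def scalar_gain(P_prior, R):
    """Kalman gain for a scalar state observed directly (H = 1)."""
    return P_prior / (P_prior + R)


# Hypothetical (prediction variance, measurement variance) pairs.
cases = [(1.0, 0.01), (1.0, 1.0), (0.01, 1.0)]
for P_prior, R in cases:
    print(f"P_prior={P_prior}, R={R}: K={scalar_gain(P_prior, R):.3f}")
# K=0.990 (trust the measurement), 0.500 (equal trust), 0.010 (trust the prediction)
```

With $K$ close to 1 the update almost replaces the prediction by the measurement; with $K$ close to 0 it barely moves.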

Optimality

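The Kalman Filter minimizes the mean squared error of the state estimate. When the system is linear and the noise is Gaussian, it is provably optimal: no other estimator achieves a lower mean squared error. Without the Gaussian assumption, it is still the best estimator among linear ones. The covariance matrix $P_{k|k}$ tracks our uncertainty, which typically decreases as we incorporate more measurements; when the process noise $Q_k$ is nonzero, it settles to a steady-state value rather than shrinking to zero, because each prediction adds uncertainty back.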
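The covariance behaviour can be seen in the simplest possible case. This sketch assumes a scalar random walk, $x_k = x_{k-1} + w_k$ with $z_k = x_k + v_k$, and hypothetical values $q = 1$, $r = 4$, $P_{0|0} = 100$ (not the document's example). It iterates only the covariance equations, which do not depend on the measured values, and compares the result with the steady state obtained by solving the fixed-point equation.

```python
import math


def posterior_variances(P0, q, r, steps):
    """Posterior variance P_{k|k} for a scalar random walk observed directly."""
    P = P0
    history = []
    for _ in range(steps):
        P_prior = P + q              # predict: process noise adds uncertainty
        K = P_prior / (P_prior + r)  # scalar Kalman gain
        P = (1.0 - K) * P_prior      # update: the measurement removes uncertainty
        history.append(P)
    return history


q, r = 1.0, 4.0                      # hypothetical noise variances
history = posterior_variances(P0=100.0, q=q, r=r, steps=20)

# At steady state the prior variance a solves a^2 - q*a - q*r = 0, and P = a - q.
steady_prior = (q + math.sqrt(q * q + 4.0 * q * r)) / 2.0
steady = steady_prior - q
print([round(p, 3) for p in history[:4]], round(steady, 4))
```

The variance drops sharply after the first measurement, then levels off at a positive value instead of reaching zero.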

1D Example: Tracking Position

Consider a simple scenario: estimating the position of an object moving at constant velocity, given noisy position measurements.

Setup

State: $\mathbf{x}_k = \begin{bmatrix} p_k \\ \dot{p}_k \end{bmatrix}$, the position and velocity

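Dynamics (constant velocity):

$$\mathbf{x}_k = F \mathbf{x}_{k-1} + \mathbf{w}_k, \qquad F = \begin{bmatrix} 1 & \Delta t \\ 0 & 1 \end{bmatrix}$$

where $\mathbf{w}_k \sim \mathcal{N}(0, Q)$ represents process noise.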

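Observation (we only measure position):

$$z_k = H \mathbf{x}_k + v_k, \qquad H = \begin{bmatrix} 1 & 0 \end{bmatrix}$$

where $v_k \sim \mathcal{N}(0, R)$ is measurement noise.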
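The two matrices of this setup are small enough to write out directly. A minimal sketch, where `constant_velocity_model` is a hypothetical helper; propagating the true starting state without noise reproduces the positions 1, 2, 3 that the object should reach.

```python
import numpy as np


def constant_velocity_model(dt):
    """F and H for state [position, velocity] with position-only measurements."""
    F = np.array([[1.0, dt],     # position += dt * velocity
                  [0.0, 1.0]])   # velocity stays constant
    H = np.array([[1.0, 0.0]])   # the sensor sees position only
    return F, H


F, H = constant_velocity_model(dt=1.0)
x = np.array([0.0, 1.0])  # start at position 0, moving at 1 m/s
for k in range(1, 4):
    x = F @ x             # noise-free propagation of the dynamics
    print(k, H @ x)       # expected (noise-free) measurement: 1, 2, 3
```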

Numerical Example

Let’s say:

- $\Delta t = 1$ second

- Process noise:

- Measurement noise: (quite noisy!)

- True trajectory: object starts at position 0, moving at 1 m/s

Initial state: $\hat{\mathbf{x}}_{0|0}$, $P_{0|0}$ (very uncertain)

Time step 1:

Predict:

$$\hat{\mathbf{x}}_{1|0} = F \hat{\mathbf{x}}_{0|0}, \qquad P_{1|0} = F P_{0|0} F^\top + Q$$
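The covariance part of this prediction already does something important. In this sketch, with a hypothetical diagonal initial covariance and $Q$ set to zero to isolate the effect of $F$, the predicted covariance gains off-diagonal entries: position and velocity become correlated.

```python
import numpy as np

dt = 1.0
F = np.array([[1.0, dt], [0.0, 1.0]])
P0 = np.diag([100.0, 100.0])  # hypothetical: large, uncorrelated initial uncertainty
Q = np.zeros((2, 2))          # process noise omitted to isolate the effect of F

P_prior = F @ P0 @ F.T + Q
print(P_prior)  # values [[200, 100], [100, 100]]: position and velocity now correlated
```

In general, for $P_{0|0} = \mathrm{diag}(a, b)$ and $\Delta t = 1$, the product is $\begin{bmatrix} a + b & b \\ b & b \end{bmatrix}$.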

Suppose we measure $z_1$ (the true position is 1, but the measurement is noisy).

Update:

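- Innovation: $\tilde{y}_1 = z_1 - H \hat{\mathbf{x}}_{1|0}$

- Innovation covariance: $S_1 = H P_{1|0} H^\top + R$

- Kalman gain: $K_1 = P_{1|0} H^\top S_1^{-1}$

- Updated state: $\hat{\mathbf{x}}_{1|1} = \hat{\mathbf{x}}_{1|0} + K_1 \tilde{y}_1$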

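Notice: the filter not only updated the position estimate but also inferred the velocity! This works because the prediction step correlates position and velocity in $P_{1|0}$, so the velocity entry of $K_1$ is nonzero and a position-only measurement also corrects the velocity.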
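The whole example can be run end to end. This is a sketch with hypothetical values throughout: $Q = 10^{-4} I$, $R = 4$ (a 2 m standard deviation), a zero initial guess with $P_{0|0} = \mathrm{diag}(100, 100)$, a first measurement $z_1 = 1.5$, and simulated measurements of the true trajectory (start at 0, 1 m/s). `kalman_step` is an illustrative name for one predict and update cycle.

```python
import numpy as np


def kalman_step(x, P, z, F, H, Q, R):
    """One predict + update cycle; returns the new estimate, covariance and gain."""
    x = F @ x                               # predict state
    P = F @ P @ F.T + Q                     # predict covariance
    S = H @ P @ H.T + R                     # innovation covariance
    K = P @ H.T @ np.linalg.inv(S)          # Kalman gain
    x = x + K @ (z - H @ x)                 # correct with the innovation
    P = (np.eye(len(x)) - K @ H) @ P
    return x, P, K


dt = 1.0
F = np.array([[1.0, dt], [0.0, 1.0]])
H = np.array([[1.0, 0.0]])
Q = 1e-4 * np.eye(2)          # hypothetical small process noise
R = np.array([[4.0]])         # hypothetical measurement variance (std 2 m)
x0 = np.zeros(2)              # hypothetical initial guess: position 0, velocity 0
P0 = np.diag([100.0, 100.0])  # very uncertain

# One step with a hypothetical measurement z_1 = 1.5
x1, P1, K1 = kalman_step(x0, P0, np.array([1.5]), F, H, Q, R)
print("gain:", K1.ravel())     # the velocity entry is nonzero
print("estimate:", x1)         # the velocity estimate moves away from 0

# Fifty steps of simulated measurements of the true trajectory (0 m, 1 m/s)
rng = np.random.default_rng(0)
x, P = x0, P0
for k in range(1, 51):
    z = np.array([k * dt + rng.normal(0.0, 2.0)])
    x, P, _ = kalman_step(x, P, z, F, H, Q, R)
print("after 50 steps:", x)    # the velocity estimate should be close to 1
```

Each call feeds the previous posterior into the next prediction, which is how the filter keeps refining both position and velocity over time.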

For the next time step, the predict step starts from this updated estimate and covariance:

$$\hat{\mathbf{x}}_{2|1} = F \hat{\mathbf{x}}_{1|1}, \qquad P_{2|1} = F P_{1|1} F^\top + Q$$