SVMs are classifiers that generalize well, both in theory and in practice. They work particularly well with few samples and high-dimensional data.

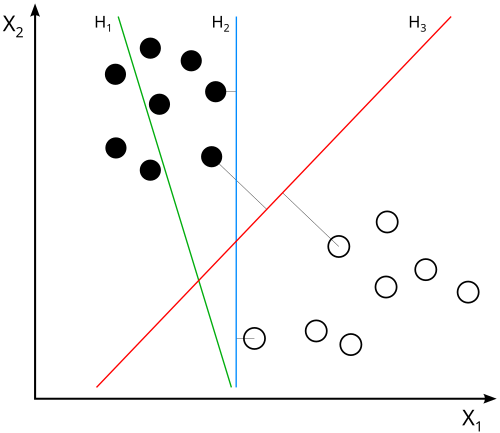

The goal of the SVM algorithm is to find a hyperplane in an N-dimensional space (where N is the number of features) that distinctly classifies the data points.
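To make "which side of the hyperplane" concrete, here is a minimal sketch in plain Python. It assumes the hyperplane is written as `w · x + b = 0`. The helper name `side_of_hyperplane` and the values of `w` and `b` are made up for illustration and do not come from any trained model.

```python
def side_of_hyperplane(w, b, x):
    """Return +1 or -1 depending on which side of w.x + b = 0 the point x lies."""
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 if score >= 0 else -1


# Hypothetical 2-D example (N = 2 features): the line x1 + x2 - 3 = 0.
w = [1.0, 1.0]
b = -3.0

# One point on each side of the line.
labels = [side_of_hyperplane(w, b, p) for p in [(0.5, 1.0), (3.0, 2.0)]]
print(labels)
```

With more features, only the length of `w` and `x` changes. The rule stays the same.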

In plain English, an SVM seeks a “road” in the feature space that separates the two classes of data points with the largest possible margin: not just an infinitesimally thin line, but a wide road that leaves some buffer room. New data points can then be classified based on which side of the road they fall on. The method also allows for a “noise margin”, where some points can be on the wrong side of the road if necessary.

One candidate hyperplane does not separate the classes. A second does, but only with a small margin. A third separates them with the maximal margin.
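The difference between these three cases can be checked numerically. The sketch below is an illustration under stated assumptions, not an SVM solver: the toy points, the three candidate hyperplanes, and the helper `geometric_margin` are all invented for this example. It scores each candidate by the smallest signed distance from any point to the hyperplane, where a negative value means at least one point is on the wrong side.

```python
import math


def geometric_margin(w, b, points, labels):
    """Smallest value of y * (w.x + b) / ||w|| over all points.

    A negative result means at least one point is misclassified.
    """
    norm = math.sqrt(sum(wi * wi for wi in w))
    return min(
        y * (sum(wi * xi for wi, xi in zip(w, x)) + b) / norm
        for x, y in zip(points, labels)
    )


# Hypothetical toy data: two points per class.
points = [(1.0, 1.0), (2.0, 1.0), (4.0, 4.0), (5.0, 4.0)]
labels = [-1, -1, 1, 1]

# Hand-picked candidates (w, b); none of these is the output of a solver.
candidates = {
    "fails to separate": ([0.0, 1.0], -5.0),  # horizontal line x2 = 5
    "small margin": ([0.0, 1.0], -1.5),       # horizontal line x2 = 1.5
    "wide margin": ([1.0, 1.0], -5.5),        # diagonal line x1 + x2 = 5.5
}

margins = {
    name: geometric_margin(w, b, points, labels)
    for name, (w, b) in candidates.items()
}
best = max(margins, key=margins.get)

for name, m in margins.items():
    print(f"{name}: {m:.3f}")
print("widest of the three:", best)
```

An actual SVM searches over all hyperplanes rather than a fixed list of three, so the widest candidate here is not necessarily the true maximal-margin solution for this data.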