Decision trees are known for producing robust, interpretable categories (splits) into which data is classified.

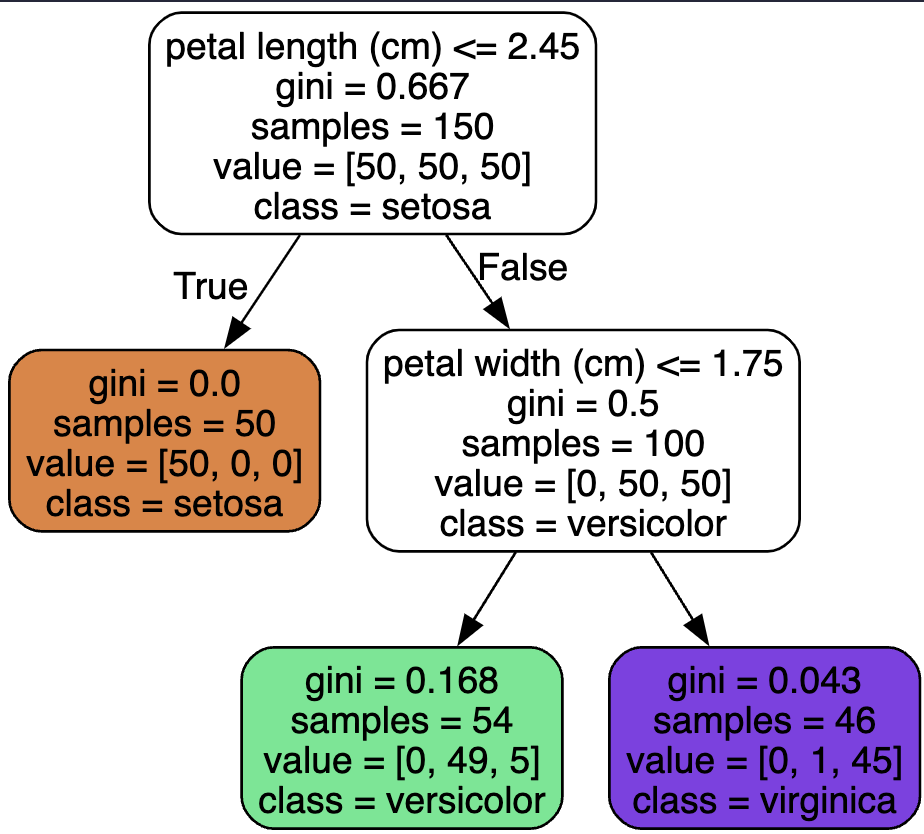

Here, the top box is the root node, containing all the data.

Nodes at the very bottom are the leaves. Nodes in between are called split nodes.

Gini Impurity

$$G_i = 1 - \sum_{k=1}^{n} p_{i,k}^2$$

Where:

- $G_i$ is the impurity of the $i$-th node

- $p_{i,k}$ is the ratio of class $k$ instances among the training instances in the $i$-th node
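As a rough illustration, the impurity of a single node can be computed directly from its class labels. This is a minimal sketch that assumes the standard Gini definition (one minus the sum of squared class ratios). The function name `gini_impurity` and the sample labels are hypothetical, chosen only for this example.

```python
from collections import Counter


def gini_impurity(labels):
    """Gini impurity of one node: 1 - sum of squared class ratios.

    `labels` holds the class label of every training instance in the node.
    (Hypothetical helper, not taken from any library.)
    """
    n = len(labels)
    if n == 0:
        # Assumption: treat an empty node as pure.
        return 0.0
    counts = Counter(labels)  # class -> number of instances in the node
    return 1.0 - sum((count / n) ** 2 for count in counts.values())


# Illustrative nodes with 100 instances each (made-up data).
pure = gini_impurity(["a"] * 100)              # one class only: 0.0
even = gini_impurity(["a"] * 50 + ["b"] * 50)  # 50/50 split: 0.5
print(pure, even)
```

A node containing a single class has impurity 0, and the value grows as the classes become more evenly mixed.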

If there are two classes in the node and 100 instances, then: